SevOne NMS 5.4 Best Practices Guide - Cluster, Peer, and HSA

SevOne NMS Documentation

All SevOne NMS user documentation is available online from the SevOne Support website.

-

Enter email address and password.

-

Click Login.

-

Click the Solutions icon.

© Copyright 2015, SevOne Inc. All rights reserved. SevOne, SevOne PAS, SevOne DNC, Deep Flow Inspection, and Rethink Performance are either registered trademarks or trademarks of SevOne Inc. Other brands, product, service and company names mentioned herein are for identification purposes only and may be trademarks of their respective owners.

Introduction

This document provides conceptual information about your SevOne cluster implementation, peers, and Hot Standby Appliance (HSA) technologies. The intent is to help you prepare and troubleshoot a multi-appliance SevOne NMS cluster. Please see the SevOne NMS Installation and Implementation Guide for how to rack up SevOne appliances and to begin using SevOne Network Management System (NMS).

SevOne Scalability

SevOne NMS is a scalable system that is able to suit any network needs. SevOne appliances work together in an environment that uses a transparent peer-to-peer technology. You can peer multiple SevOne appliances together to create a SevOne cluster that can monitor an unlimited number of network objects and flow data. There is no limit to the number of peers that can work together in a cluster.

There are several models of the appliances that run the SevOne software.

-

PAS - Performance Appliance System (PAS) appliances can be rated to monitor up to 200,000 network objects.

-

DNC - Dedicated NetFlow Collector (DNC) appliances monitor flow technologies.

-

HSA - Hot Standby Appliances (HSA) enable you to create a pair of appliances that act as one peer to provide redundancy.

-

PLA – Performance Log Appliance (PLA) provides deep insight into the performance of both hardware and the services or applications running above.

SevOne appliances scale linearly to monitor the world's largest networks. Each SevOne appliance is both a collector and a reporter. In a multi-peer cluster, all appliances are aware of which peer monitors each device and can efficiently route data requests to the user. The system is flexible enough to enable indicators from different devices to appear on the same graph when different SevOne NMS peers monitor the devices.

The peer-to-peer architecture not only scales linearly, without loss of efficiency as you add additional peers, but actually gets faster. When a SevOne NMS peer receives a request from one of its peers, it processes the request locally and sends the requesting peer only the data it needs to complete the report. Thus if a report spans multiple peers, they all work on the report simultaneously to retrieve the results much faster than any single appliance can.

You can create redundancy for each peer with a Hot Standby Appliance that works in tandem with the active appliance to create a peer appliance pair. If the active appliance in the Hot Standby Appliance peer pair fails, the passive appliance in the peer pair takes over. The passive appliance in the Hot Standby Appliance peer pair maintains a redundant copy of all poll data and configuration data for the peer pair. If a failover occurs, the transition from passive appliance to active appliance is transparent to all users and all polling and reporting continues seamlessly.

Each SevOne cluster has a Cluster Master peer. The cluster master peer is the SevOne appliance that stores the master copy of Cluster Manager settings, security settings, and other global settings in the config database. All other active peers in your SevOne cluster pull the configuration data from the cluster master peer config database. Some security settings and device edit functions depend on communication between the active peers in the cluster and the cluster master peer. The cluster master appliance hardware and software are no different from any other peer appliance, so you can designate any appliance to be the cluster master. When you choose the cluster master, consider geographic location and data center stability, because they affect latency and power supply, as they would for any computer network implementation.

From the user point of view, there is only one peer because each peer in the cluster presents all data, no matter which peer collects it, with no reference to the peer that collects the data. You can log on to any SevOne NMS peer in your cluster and retrieve data that spans any or all devices in your network.

All SevOne communication protocols assume a single hop adjacency which means that a request or replication must be directly sent to the IP address of the destination SevOne peer and cannot be routed in any way by the members of the cluster.

All peers must be able to communicate with the cluster master peer but the SevOne cluster is designed to be able to gracefully operate in a degraded state when one or more of the peers are disconnected from the rest of the cluster.

-

A peer that cannot communicate with the cluster master peer can run reports that return results for all data available on the peers with which it maintains communication.

-

A peer that cannot communicate with the cluster master cannot make any configuration changes for the duration of being disconnected.

-

The peers in the cluster that remain in communication with the cluster master can make configuration changes and can run reports for all data available on peers with which they maintain communication.
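The degraded-state rules above can be summarized in a small model. This is an illustrative sketch only: the `capabilities` function, the `PeerCapabilities` type, and the peer names are hypothetical and are not part of SevOne NMS.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PeerCapabilities:
    """What a peer can do given its current connectivity (illustrative model)."""
    can_change_config: bool
    report_sources: frozenset


def capabilities(peer, master, reachable):
    """Return the capabilities of `peer`.

    `reachable` is the set of peers that `peer` can currently contact directly.
    All names are hypothetical; this only models the documented rules.
    """
    # Reports cover the peer's own data plus data on peers it can still reach.
    sources = frozenset(reachable) | {peer}
    # Configuration changes require communication with the cluster master.
    can_change = peer == master or master in reachable
    return PeerCapabilities(can_change_config=can_change, report_sources=sources)
```

For example, a peer that can reach only one other peer, and not the cluster master, can still report on both peers' data but cannot make configuration changes.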

There are two types of SevOne cluster deployment architectures:

-

Full Mesh - All peers can talk to each other. Recommended for enterprises and service providers to monitor their internal network.

-

Hub and Spoke - All peers can talk to the cluster master and you can define subsets of the other interconnected peers. Useful for Managed Service Providers. Hub and spoke implementations provide the following:

-

Reports run from the cluster master peer return fully global result sets.

-

Reports run from a peer in a partially connected subset of peers return result sets from itself, the cluster master peer, and any other peers with which the peer can directly communicate.

-

Most configuration changes at both the peer level and the cluster level can be accomplished from all peers.

-

Updates and other administrative functions that affect ALL peers must be run from the cluster master peer.

-

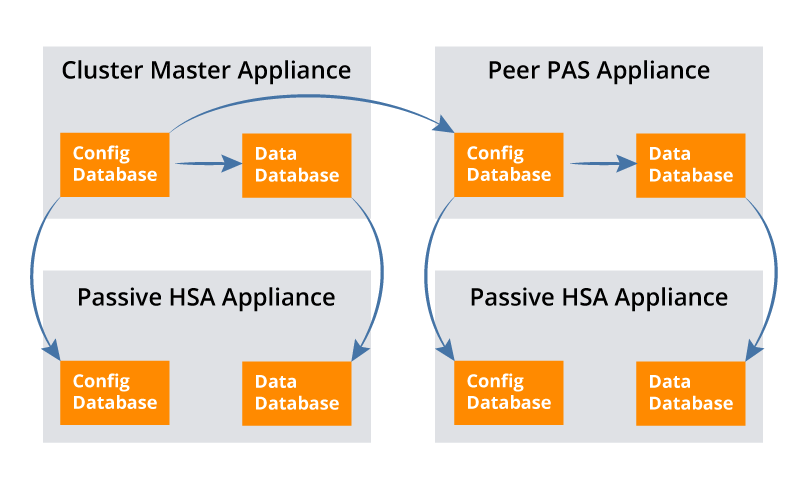
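Because all communication is single hop, the result set of a report depends on which peers the reporting peer can reach directly. The following sketch illustrates this for a hub and spoke layout. The `report_scope` function, the `links` list, and the peer names are assumptions made for illustration, not SevOne NMS interfaces.

```python
def report_scope(peer, links):
    """Return the peers whose data a report run on `peer` can include.

    `links` is an iterable of (a, b) pairs meaning a and b can talk directly.
    Requests are never relayed, so only direct neighbors contribute.
    """
    scope = {peer}
    for a, b in links:
        if a == peer:
            scope.add(b)
        elif b == peer:
            scope.add(a)
    return scope


# Hypothetical hub and spoke cluster: the master reaches every peer,
# and only spoke1 and spoke2 are connected to each other.
links = [
    ("master", "spoke1"),
    ("master", "spoke2"),
    ("master", "spoke3"),
    ("spoke1", "spoke2"),
]
```

In this layout a report run on the master spans all four peers, while a report run on spoke3 spans only spoke3 and the master.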

Database Replication Explanation

The SevOne NMS application peer-to-peer architecture has two fundamental databases.

-

Config Database - The config database stores configuration settings such as cluster settings, security settings, device settings, etc. SevOne NMS saves the configuration settings you define (on any peer in the cluster) in the config database on the cluster master peer. All active appliances in the cluster pull config database changes from the cluster master peer's config database. Each passive appliance in a Hot Standby Appliance peer pair pulls its active appliance's config database to replicate the config database onto the passive appliance.

-

Data Database - The data database stores a copy of the config database plus all poll data for the devices/objects that the peer polls. The config database on an active appliance replicates to the data database on the appliance. Each passive appliance in a Hot Standby Appliance peer pair pulls the active appliance's data database to replicate the data database on the passive appliance.

Cluster Database Replication

Database Replication

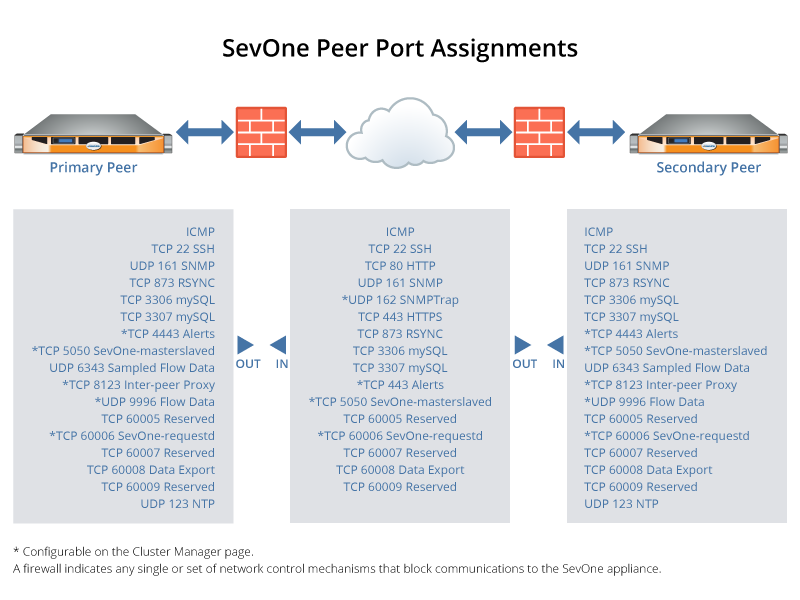
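The replication relationships described in this section can be written down as a simple lookup. This is a sketch of the documented behavior only; the `replication_sources` function and the strings it returns are hypothetical labels, not SevOne NMS settings.

```python
def replication_sources(role, is_cluster_master=False):
    """Return where each database on an appliance replicates from.

    role: "active" or "passive" (passive applies to HSA peer pairs).
    A value of None means the database is the master copy.
    """
    if role == "passive":
        # The passive appliance pulls both databases from its active neighbor.
        return {
            "config": "active appliance config database",
            "data": "active appliance data database",
        }
    if role != "active":
        raise ValueError("role must be 'active' or 'passive'")
    # The cluster master holds the master copy of the config database;
    # every other active appliance pulls config changes from it.
    config_source = None if is_cluster_master else "cluster master config database"
    # On an active appliance the local config database replicates
    # to the local data database; poll data is collected locally.
    return {"config": config_source, "data": "local config database"}
```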

Peer Ports

SevOne peers communicate with each other to maintain a consistent environment. The following ports must be open between all peers:

Inter-PAS Connectivity Ports

-

ICMP – Inter-peer Monitoring**

-

TCP 22 - SSH Access

-

TCP 80 – HTTP, SOAP API, and AJAX Calls

-

UDP 161 - SNMP Inter-peer Monitoring

-

UDP 162 – SNMP Trap Inter-peer Monitoring

-

TCP 443 - HTTPS**

-

TCP 873 - RSYNC

-

TCP 3306 – MySQL**

-

TCP 3307 - MySQL2**

-

TCP 4443 – Alert Notification**

-

TCP 5050 - SevOne-masterslaved**

-

UDP 6343 – sFlow and Sampled Flow Data

-

TCP 8123 - Inter-peer Proxy VMware vCenter

-

UDP 9996 – Flow Data

-

TCP 60005 - Reserved

-

TCP 60006 - SevOne-requestd**

-

TCP 60007 - Reserved

-

TCP 60008 – Raw Data Export – SevOne Raw Data Feed

-

TCP 60009 - Reserved

-

UDP 123 - NTP Inter-peer Time Sync

**Required
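Before you add a peer, it can help to confirm that the required TCP ports are reachable from an existing peer. The following is a minimal sketch that uses only the Python standard library. It is not a SevOne tool; the function name and the `connect` parameter (included so the check can be exercised without a network) are assumptions. ICMP is also required but cannot be verified with a TCP connection.

```python
import socket

# TCP ports marked **Required in the list above.
REQUIRED_TCP_PORTS = {
    443: "HTTPS",
    3306: "MySQL",
    3307: "MySQL2",
    4443: "Alert Notification",
    5050: "SevOne-masterslaved",
    60006: "SevOne-requestd",
}


def check_tcp_ports(host, ports=REQUIRED_TCP_PORTS, timeout=3.0,
                    connect=socket.create_connection):
    """Return {port: True/False} for whether a TCP connection succeeds."""
    results = {}
    for port in ports:
        try:
            # The connection is closed as soon as it is established.
            with connect((host, port), timeout=timeout):
                results[port] = True
        except OSError:
            # Covers refused connections, timeouts, and unreachable hosts.
            results[port] = False
    return results
```

For example, `check_tcp_ports("192.0.2.10")` (a placeholder address) returns a dictionary keyed by port number; any port that maps to `False` is blocked or not listening.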

Manage Peers

In a single appliance/single peer cluster implementation, all relevant information (cluster master, discovery, alerts, reports, etc.) is local to the one appliance. For a single peer cluster the SevOne appliance ships with the following configuration:

-

Name: SevOne Appliance

-

Master: Yes

-

IP: 127.0.0.1

In a multi-peer cluster implementation, one appliance in the cluster is the cluster master peer. The cluster master peer receives configuration data such as cluster settings, security settings, device peer assignments, archived alerts, and flow device lists. The cluster master peer software is identical to the software on all other peers which enables you to designate any SevOne NMS appliance to be the cluster master peer. After you determine which peer is to be the cluster master that peer remains the master of the cluster and only SevOne Support Engineers can change the cluster master peer designation.

All active peers in the cluster replicate the configuration data from the cluster master peer. All active SevOne NMS appliances in a cluster are peers of each other. One of the appliances in a Hot Standby Appliance peer pair is a passive peer. See the Hot Standby Appliances chapter for how to include Hot Standby Appliance peer pairs in your cluster.

All active peers perform the following actions.

-

Provide a full web interface to the entire SevOne NMS cluster.

-

Update the cluster master peer with configuration changes.

-

Pull config database changes from the cluster master peer.

-

Communicate with other peers to get non-local data.

-

Discover/delete the devices you assign to the peer.

-

Poll data from the objects and indicators on the devices you assign to the peer.

When you add a network device for SevOne NMS to monitor, you assign the device to a specific SevOne NMS peer. The assigned peer lets the cluster master know that it (the assigned peer) is responsible for the device. The cluster master maintains the device assignment list in the config database, which is replicated to the other peers in the cluster. From this point forward, all modifications to the device, including discovery, polling, and deletion, are the responsibility of the SevOne NMS peer to which you assign the device. The peer collects and stores all data for the device. This is in direct contrast to other network management systems, which collect data remotely and then ship the data back to a master server for storage. SevOne NMS stores all local data on the local peer, which enables greater link efficiency and speed.
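Because every peer holds a replicated copy of the device assignment list, any peer can work out which peers hold the data for a given request. The sketch below illustrates the idea. The `group_devices_by_peer` function and the dictionary shape of `assignments` are hypothetical stand-ins, not the actual SevOne NMS data structures.

```python
from collections import defaultdict


def group_devices_by_peer(devices, assignments):
    """Group requested devices by the peer that polls and stores their data.

    `assignments` maps device name -> peer name, standing in for the device
    assignment list replicated from the cluster master (hypothetical shape).
    """
    by_peer = defaultdict(list)
    for device in devices:
        by_peer[assignments[device]].append(device)
    return dict(by_peer)
```

Each group can then be requested from its owning peer, which processes the request locally and returns only the data needed for the report.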

Add Peers

For a new appliance, follow the steps in the SevOne NMS Installation and Implementation Guide to rack up the appliance and to assign the appliance an IP address. When you log on to the new appliance, the Startup Wizard appears. On the Startup Wizard, select Add This Appliance to an Existing SevOne NMS Cluster and click Next to navigate to the Cluster Manager at the appliance level on the Integration tab.

You can access the Cluster Manager from the navigation bar. Click the Administration menu and select Cluster Manager. From the Cluster Manager, click the icon in the cluster hierarchy on the left side next to the peer to add/move to display the peer's IP address. Click on the IP address and then select the Integration tab on the right side.

Notes:

-

All data on this peer/appliance will be deleted.

-

You need to know the name of this peer (appears in the cluster hierarchy on the left side of the Cluster Manager).

-

You need to know the IP address of this appliance (appears in the cluster hierarchy on the left side of the Cluster Manager).

-

You need to be able to access the Cluster Manager on a SevOne NMS peer that is already in the cluster to which you intend to add this appliance.

-

If you do not complete the steps within ten minutes, you must start again at step 1) Click Allow Peering … on this tab to queue this appliance for peering within the following ten minutes.

After you click Allow Peering on this tab, you will have ten minutes to perform the following steps from a peer that is already in the cluster to which you intend to add this peer/appliance.

-

Click Allow Peering on the Integration tab on the appliance you are adding.

-

Log on to the peer in the destination cluster as a System Administrator.

-

Go to the Cluster Manager (Administration > Cluster Manager).

-

At the Cluster level select the Peers tab.

-

Click Add Peer to display a pop-up.

-

Enter the Peer Name and the IP Address of the peer/appliance you are adding.

-

On the pop-up, click Add Peer:

-

All data on the peer/appliance you are adding is deleted.

-

Do not do anything on the peer you are adding until a Success message appears on the peer on which you click Add Peer.

-

You can continue working and performing business as usual on all peers that are already in the cluster.

-

The new peer appears on the Peers tab in the destination cluster with a status message. Click Refresh to update the status.

-

The new peer appears in the cluster hierarchy on the left.

-

After the Success message appears on the peer in the destination cluster, you can go to the peer you just added, where the entire cluster hierarchy to which you added the peer should appear on the left.

-

You can use the Device Mover to move devices to the new peer.

If the integration fails, click View Cluster Logs on the Peers tab on the peer that is in the destination cluster to display a log of the integration messages.

Click Clear Failed to remove failed attempts from the list. Failed attempts are not automatically removed from the list, which enables you to navigate away from the Peers tab during the integration.

SevOne-peer-add

The SevOne-peer-add command line script enables you to add a peer to your SevOne cluster. The new peer must be a clean box that contains no data in the data database or the config database.

-

Follow the steps in the SevOne NMS Installation and Implementation Guide to rack up the appliance and to perform the steps in the config shell to give the peer an IP address.

-

On the cluster master peer, access the command line prompt and enter: SevOne-peer-add

-

Follow the prompts to enter the new peer's IP address and the standard SevOne internal password.

Hot Standby Appliances

SevOne appliances can collect gigabytes of data each day. You can create redundancy for each appliance with a Hot Standby Appliance to create a peer pair so if the active appliance in the peer pair fails there is no significant loss of poll data. The passive appliance in the peer pair assumes the role of the active appliance in the peer pair. Having a peer pair in a peer to peer cluster implementation has its own terminology.

Terminology

-

Primary Appliance - Implemented to be the active, normal, polling appliance. If the primary appliance fails, it is still the primary appliance but it becomes the passive appliance.

-

Secondary Appliance - Implemented to be the passive appliance in a Hot Standby Appliance peer pair. If the active appliance fails, it is still the secondary appliance but it assumes the active role.

-

Active Appliance - The currently polling appliance. Upon initial setup the primary appliance is the active appliance. If the primary appliance fails, the secondary appliance becomes the active appliance in the peer pair.

-

Passive Appliance - The appliance that currently replicates from the active appliance. Upon initial setup the secondary appliance is the passive appliance.

-

Neighbor - The other appliance in the peer pair. The primary appliance's neighbor is the secondary appliance and vice versa.

-

Fail Over – From the perspective of the active appliance, fail over occurs when the currently passive appliance assumes the active role.

-

Take Over – From the perspective of the passive appliance, take over occurs when the passive appliance assumes the active role.

-

Split Brain – When both appliances in a peer pair are active or both appliances in a peer pair are passive this is known as split brain. Split brain can occur when the communication between the appliances is interrupted and the passive appliance becomes active. When an administrative user logs on, an administrative message appears to let you know that the split brain condition exists. See the Troubleshoot Cluster Management chapter for details.
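The distinction between an appliance's fixed designation (primary or secondary) and its current role (active or passive) can be illustrated with a small model. This is a sketch for clarity only; the `Appliance` class and both functions are hypothetical and do not reflect SevOne NMS internals.

```python
from dataclasses import dataclass


@dataclass
class Appliance:
    designation: str  # "primary" or "secondary"; fixed at implementation
    role: str         # "active" or "passive"; changes on fail over/take over


def is_split_brain(a, b):
    """True when both appliances in a peer pair report the same role."""
    return a.role == b.role


def take_over(passive, active):
    """The passive appliance assumes the active role; designations stay fixed."""
    passive.role, active.role = "active", "passive"
```

After a take over, the primary appliance is still the primary but is now passive, and the secondary appliance is still the secondary but is now active.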

Both appliances in the peer pair should have the same hardware/software configuration to prevent performance problems in the event of a failover (for example, a 60K HSA for a 60K PAS). Each Hot Standby Appliance peer pair can have only the two appliances in the peer pair. A Hot Standby Appliance cannot have a Hot Standby Appliance attached to it.

In a Hot Standby Appliance peer pair implementation, the role of the active appliance in the peer pair is to:

-

Provide a full web interface to the entire SevOne NMS cluster.

-

Update the cluster master peer with configuration changes.

-

Pull config database changes from the cluster master peer.

-

Communicate with other peers to get any non-local data.

-

Discover/delete the devices you assign to the peer.

-

Poll data from the assigned devices.

The role of the passive appliance in the peer pair is to:

-

Replicate the config database information and the data database information from the active appliance.

-

Switch to the active role when the current active appliance is not reachable.

An HSA implementation creates an appliance relationship in which the passive appliance receives a continuous stream of database replication (commonly known as binary logs of database actions) from its active neighbor. The passive appliance replays this stream and makes precisely the same changes that the active appliance made (for example, record collected data, add a new device configuration, or delete a monitored device and all of its historic data). The passive appliance also performs a continuous heartbeat check against the active appliance. If the passive appliance determines that the active appliance's heartbeat has been absent for a specified time period (referred to as the minimum dead time), the passive appliance assumes that the active appliance has indeed suffered a catastrophic failure. At that point the passive appliance takes over polling for the peer pair's devices and notifies the cluster master that it has taken over for what was the active appliance.
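The heartbeat check and minimum dead time can be sketched as follows. This is a simplified illustration, not the SevOne implementation: the `HeartbeatMonitor` class is hypothetical, timestamps are passed in explicitly as seconds, and treating a silence exactly equal to the minimum dead time as a failure is an assumption.

```python
class HeartbeatMonitor:
    """Passive-side check: take over once the active appliance has been
    silent for at least `min_dead_time` seconds (illustrative only)."""

    def __init__(self, min_dead_time, now):
        self.min_dead_time = min_dead_time
        self.last_seen = now

    def heartbeat(self, now):
        """Record a successful heartbeat from the active appliance."""
        self.last_seen = now

    def should_take_over(self, now):
        """True when no heartbeat has arrived within the minimum dead time."""
        return now - self.last_seen >= self.min_dead_time
```

A single missed heartbeat does not trigger a take over; only continuous silence for the full minimum dead time does.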

The passive appliance in the Hot Standby Appliance peer pair can take over for the active appliance at any time. Any changes you make to the active appliance (including settings updates, new polls, or device deletions) are replicated to the passive appliance as frequently as possible.

Hot Standby Appliance Peer Pair Ports

The primary appliance and the secondary appliance in a Hot Standby Appliance peer pair need to communicate with each other to maintain a consistent environment. The appliances need to have the following ports open between each other:

-

TCP 3306 - MySQL replication

-

TCP 3307 - MySQL replication

-

TCP 5050 - Active/passive communication

Add an Appliance to Create a Hot Standby Appliance Peer Pair

There are two ways to implement a Hot Standby Appliance peer pair: VIP and non-VIP. Each has its advantages and disadvantages, and each works best in certain implementations.

Virtual IP Configuration (VIP Configuration)

VIP Configuration requires each appliance to have two Ethernet cards and a dedicated IP address. The two appliances share a virtual IP address which is the IP address of the appliance that is currently active. This works when the two appliances are on the same subnet.

Advantages

-

Transparent to the end user; if a failover occurs, the IP address does not change.

-

Appliances are not separated by a firewall.

-

Replicated data is restricted to a subnet.

Disadvantages

-

Requires three IP addresses.

-

Only works if the two appliances are in the same subnet. Barring complicated routing this setup generally means that the two appliances are close to each other so if the building goes down, both appliances go down.

In a VIP configuration the access lists necessary for SNMP, ICMP, port monitoring, and other polled monitoring are only set for the virtual IP address.

VIP Configuration requires you to set up two interfaces.

-

eth0: - This is the virtual interface and should be the same on both appliances. This interface is brought up and down appropriately by the system.

-

eth1: - This is the administrative interface for the appliance. The system does not alter this interface.

The Cluster Manager provides a Peer Settings tab to enable you to view the Primary, Secondary, and Virtual IP addresses in a VIP configuration Hot Standby Appliance peer pair.

-

Primary Appliance IP Address - The administrative IP address for the primary appliance (eth1).

-

Secondary Appliance IP Address - The administrative IP address for the secondary appliance (eth1).

-

Virtual IP Address - The virtual IP address for the peer pair (eth0).

Non-VIP Configuration

Non-VIP Configuration requires each appliance to have an IP address and be able to communicate with each other. In the event of a failover or takeover when the appliances switch roles the IP address of the peer pair changes accordingly.

Advantages

-

Requires two IP addresses.

-

Appliances do not need to be on the same subnet.

Disadvantages

-

The IP address of the peer pair changes when the appliances fail over.

You need to be aware that you must check two IP addresses or include a DNS load balancer to update the DNS record.

-

A firewall separates the appliances.

-

Data replicates across a WAN.

In a non-VIP configuration all access lists need to include the IP addresses of both appliances in the event of a failover.

Non-VIP Configuration requires you to set up only one interface.

-

eth0: - This is the administrative interface for the appliance. The system does not alter this interface.

The Cluster Manager provides a Peer Settings tab to enable you to view the Primary and Secondary IP addresses in a non-VIP configuration Hot Standby Appliance peer pair.

-

Primary Appliance IP Address - The administrative IP address for the primary appliance (eth0).

-

Secondary Appliance IP Address - The administrative IP address for the secondary appliance (eth0).

-

Virtual IP Address - (this field should be blank).

Note: If you do not specify either the primary appliance IP address or the secondary appliance IP address, no active/passive actions take place.
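The access list requirements for the two configurations differ, which the following sketch makes explicit. The `access_list_addresses` function is hypothetical and the addresses in the usage note are documentation placeholders.

```python
def access_list_addresses(primary_ip, secondary_ip, virtual_ip=None):
    """Return the IP addresses that monitored devices should allow.

    VIP configuration: only the virtual IP address is needed.
    Non-VIP configuration (virtual_ip left blank): both appliance
    addresses are needed so that polling continues after a failover.
    """
    if virtual_ip:
        return [virtual_ip]
    return [primary_ip, secondary_ip]
```

For example, `access_list_addresses("192.0.2.11", "192.0.2.12")` returns both appliance addresses, while passing `virtual_ip="192.0.2.10"` returns only the virtual address.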

SevOne-hsa-add

The SevOne-hsa-add command line script enables you to add a Hot Standby Appliance to create a peer pair in your SevOne cluster. The Hot Standby Appliance must be a clean box that contains no data in the data database or the config database.

-

Follow the steps in the SevOne NMS Installation and Implementation Guide to rack up the appliance and to perform the steps in the config shell to give the Hot Standby Appliance an IP address.

-

On the Primary appliance in the peer pair access the command line prompt and enter: SevOne-hsa-add

-

Follow the prompts to enter the Hot Standby Appliance's IP address and the standard SevOne internal password.

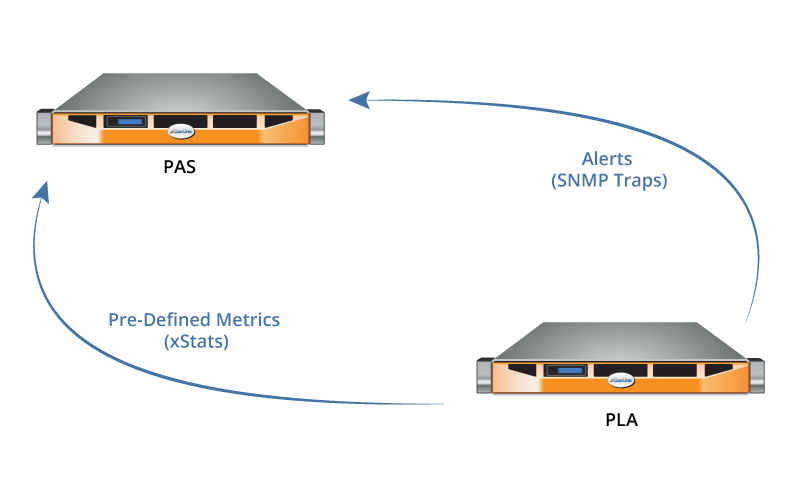

Performance Log Appliance

The SevOne PLA provides real-time analytics on large volumes of unstructured log data. This enables a better understanding of the behavior of users, customers, applications, and network and IT infrastructure. The SevOne PLA makes it easy to identify problems and eliminates the need to dig through log data. The Performance Log Appliance (PLA) enables access to SevOne PLA log analytics software to grant users the benefit of the automatic collection and organization of log data to better provide a detailed picture of user and machine behavior. Log analysis provides deep insight into the performance of both hardware and the services or applications that run above.

Due to the sheer volume of log data, the Performance Log Appliance is a separate appliance in your SevOne cluster. The SevOne PLA software is loaded on a Performance Log Appliance.

The SevOne PLA appliance forwards SNMP traps to a SevOne PAS appliance and imports pre-defined metrics into the PAS via xStats.

PLA & PAS Integration

Data Flow

SevOne PLA generates SNMP traps and pushes the alerts it generates to SevOne NMS, regardless of whether or not the device is polled and monitored in SevOne NMS. The certification of a SevOne PLA application key determines the metrics that are pushed into or are collected by SevOne NMS.

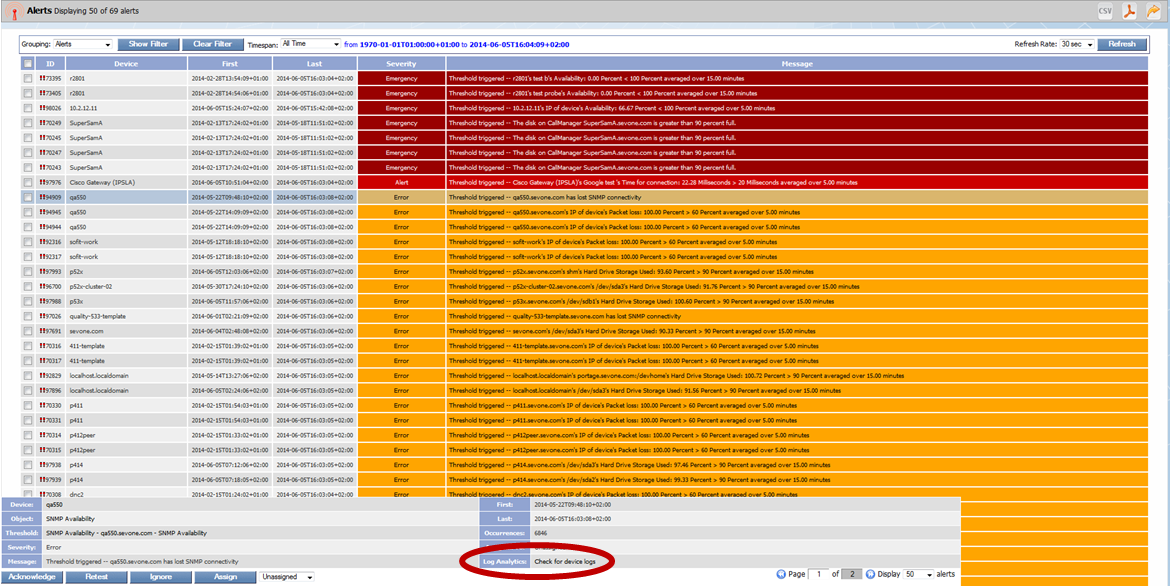

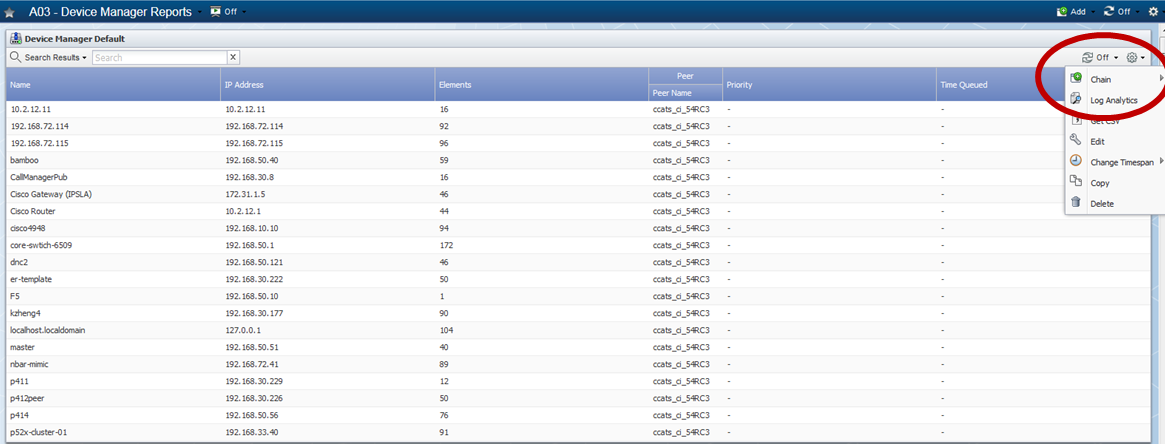

Click Through from SevOne NMS to SevOne PLA Data

In SevOne NMS you can access log data from the Alerts page.

Alerts click through to log data

In SevOne NMS you can access log data from report attachments.

Report attachment click through to log data

The Performance Log Appliance SevOne PLA software enables you to monitor log data independently from the SevOne NMS software. See the SevOne Performance Log Appliance Administration and User Guide for details.

Troubleshoot Cluster Management

The Cluster Manager displays statistics and enables you to define application settings. The Cluster Manager enables you to integrate additional SevOne NMS appliances into your cluster, to resynchronize the databases, and to change the roles of Hot Standby Appliances (fail over, take over, rectify split brain). The following is a subsection of the comprehensive Cluster Manager documentation. See the SevOne NMS System Administration Guide for additional details.

To access the Cluster Manager from the navigation bar, click the Administration menu and select Cluster Manager.

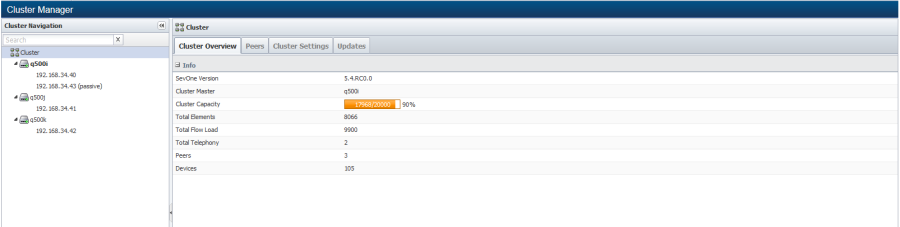

Cluster Manager - Cluster Level - Cluster Overview tab

The left side enables you to navigate your SevOne NMS cluster hierarchy. When the Cluster Manager appears, the default display is the Cluster level with the Cluster Overview tab selected.

-

Cluster Level - The Cluster level enables you to view cluster wide statistics, to view statistics for all peers in the cluster and to define cluster wide settings.

-

Peer Level - The Peer level enables you to view peer specific information and to define peer specific settings. The cluster master peer name displays at the top of the peer hierarchy in bold font and the other peers display in alphabetical order.

-

Appliance Level - Click to display appliance level information including database replication details. Each Hot Standby Appliance peer pair displays the two appliances that act as one peer in the cluster. The appliance level provides an Integration tab to enable you to add a new peer to your cluster.

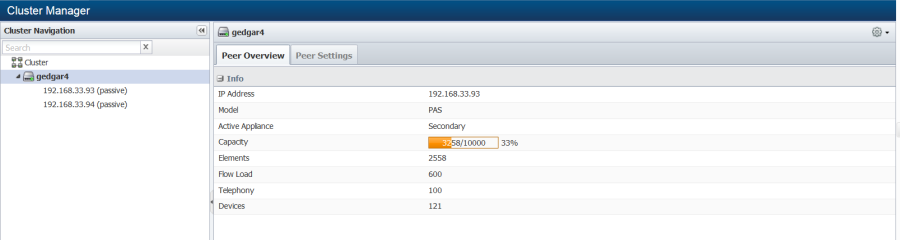

Peer Level Cluster Management

Peer Level – At the Peer level, on the Peer Settings tab, the Primary/Secondary subtab enables you to view the IP addresses for the two appliances that act as one SevOne NMS peer in a Hot Standby Appliance (HSA) peer pair implementation. The Primary appliance is initially set up to be the active appliance. If the Primary appliance fails, it is still the Primary appliance but its role changes to the passive appliance. The Secondary appliance is initially set up to be the passive appliance. If the Primary appliance fails, the Secondary appliance is still the Secondary appliance but it becomes the active appliance. You define the appliance IP address upon initial installation and implementation. See the SevOne NMS Installation and Implementation Guide for details.

-

The Primary Appliance IP Address field displays the IP address of the primary appliance.

-

The Secondary Appliance IP Address field displays the IP address of the secondary appliance.

-

The Virtual IP Address field appears empty unless you implement the primary appliance and the secondary appliance to share a virtual IP address (VIP HSA implementation).

-

The Failover Time field enables you to enter the number of seconds for the passive appliance to wait for the active appliance to respond before the passive appliance takes over. SevOne NMS pings every 2 seconds and the timeout for a ping is 5 seconds.

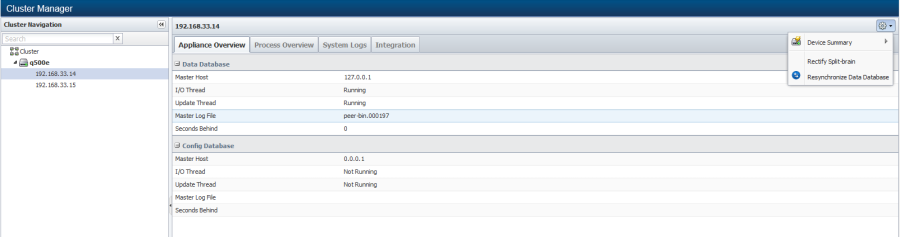

Appliance Level Cluster Management

Appliance Level – At the Appliance level the appliance IP address displays. For a Hot Standby Appliance peer pair implementation two appliances appear.

-

The Primary appliance appears first in the peer pair.

-

The Secondary appliance appears second in the peer pair.

-

The passive appliance in the peer pair displays (passive).

-

The active appliance that is actively polling does not display any additional indicators.

Click on an appliance and an icon appears above the right side of the Cluster Manager. Click the icon to display options that depend on the appliance you select in the hierarchy on the left side.

-

Select Device Summary to access the Device Summary for the appliance. When there are report templates that are applicable for the device, a link appears to the Device Summary along with links to the report templates.

-

Select Fail Over to have the active appliance in a Hot Standby Appliance peer pair become the passive appliance in the peer pair. This option appears when you select the active appliance in a Hot Standby Appliance peer pair in the hierarchy.

-

Select Take Over to have the passive appliance in a Hot Standby Appliance peer pair become the active appliance in the peer pair. This option appears when you select the passive appliance in a Hot Standby Appliance peer pair in the hierarchy.

-

Select Resynchronize Data Database to have an active appliance pull the data from its own config database to its data database or to have the passive appliance in a Hot Standby Appliance peer pair pull the data from the active appliance's data database. This is the only option that appears when you select the cluster master peer's appliance in the hierarchy.

-

Select Resynchronize Config Database to have an active appliance pull the data from the cluster master peer's config database to the active peer's config database or to have the passive appliance in a Hot Standby Appliance peer pair pull the data from the active appliance's config database.

-

Select Rectify Split Brain to rectify situations when both appliances in a Hot Standby Appliance peer pair think they are active or both appliances think they are passive. See the Split Brain Hot Standby Appliances chapter for details.

Appliance Overview

Click the icon next to a peer in the cluster hierarchy on the left side, click on the IP address of an appliance, and then select the Appliance Overview tab on the right side to display appliance level information.

Data Database Information

-

Master Host - Displays the IP address of the source from which the appliance replicates the data database. In a single appliance implementation and on an active appliance, this is the IP address of the appliance itself. On an HSA passive appliance, the data database replicates from the active appliance's data database.

-

I/O Thread - Displays Running when an active appliance is querying its config database for updates for the data database. Displays Not Running when the appliance is not querying the config database. On an HSA passive appliance, the data database queries the active appliance's data database instead.

-

Update Thread - Displays Running when the appliance is in the process of replicating the config database to the data database. Displays Not Running when the appliance is not currently replicating to the data database.

-

Master Log File - Displays the name of the log file the appliance reads to determine if it needs to replicate the config database to the data database.

-

Seconds Behind - Displays 0 (zero) when the data database is in sync with the config database or displays the number of seconds that the synchronization is behind.

Config Database Information

-

Master Host - Displays the IP address of the source from which the appliance replicates the config database. In a single appliance implementation and on the master appliance, this is the IP address of the appliance itself. On an HSA passive appliance, the config database replicates from the active appliance's config database.

-

I/O Thread - Displays Running when an active appliance is querying the master peer config database for updates. Displays Not Running when the appliance is not querying the master peer config database. On an HSA passive appliance, the config database queries the active appliance's config database instead.

-

Update Thread - Displays Running when the appliance is in the process of replicating the config database. Displays Not Running when the appliance is not replicating the config database.

-

Master Log File - Displays the name of the log file the appliance reads to determine if it needs to replicate the config database.

-

Seconds Behind - Displays 0 (zero) when the config database is in sync with the master peer config database or displays the number of seconds that the synchronization is behind.
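The fields on the Appliance Overview tab can be read together as a quick replication health check. The Python sketch below is one way to express that reading; the `ReplicationStatus` class and `replication_issues` function are hypothetical names, the sample values are invented, and flagging Not Running as worth a look is an assumption rather than documented SevOne NMS behavior.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class ReplicationStatus:
    """Fields mirror the labels shown for the data and config databases."""
    master_host: str
    io_thread: str        # "Running" or "Not Running"
    update_thread: str    # "Running" or "Not Running"
    master_log_file: str
    seconds_behind: int   # 0 means the database is in sync


def replication_issues(status: ReplicationStatus) -> List[str]:
    """Return notes for an operator; an empty list means nothing stood out."""
    issues = []
    if status.io_thread != "Running":
        issues.append("I/O Thread is not running")
    if status.update_thread != "Running":
        issues.append("Update Thread is not running")
    if status.seconds_behind > 0:
        issues.append(f"replication is {status.seconds_behind} seconds behind")
    return issues


if __name__ == "__main__":
    # Hypothetical values for illustration only.
    sample = ReplicationStatus("192.0.2.10", "Not Running", "Running",
                               "example-log.000001", 42)
    for note in replication_issues(sample):
        print(note)
```

The same check applies to either database, because the data database and config database sections expose the same five fields.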

Split Brain Hot Standby Appliances

The typical split brain results from a fail over followed by a fail back: the appliance that was active goes down and then comes back up again as the active appliance. The lack of communication from the active appliance causes the passive appliance to become the active appliance, which makes both appliances in the Hot Standby Appliance pair active. A split brain can also occur when both appliances are passive.

When a user with an administrative role logs on to SevOne NMS and there is a Hot Standby Appliance peer pair that is in a split brain state, an administrative message appears.

-

Neither appliance in your Hot Standby Appliance peer pair with IP addresses <n> and <n> is in an active state.

-

Both appliances in your Hot Standby Appliance peer pair with IP addresses <n> and <n> are either active or both appliances are passive.
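The two split brain conditions can be stated compactly as a check on the pair's roles. The following Python sketch is illustrative only; the function name and the returned strings are assumptions and do not correspond to any SevOne NMS API or message text.

```python
def classify_pair(primary_is_active: bool, secondary_is_active: bool) -> str:
    """Classify a Hot Standby Appliance peer pair from its two roles."""
    if primary_is_active and secondary_is_active:
        return "split brain: both active"
    if not primary_is_active and not secondary_is_active:
        return "split brain: both passive"
    # Exactly one appliance is active, which is the expected state.
    return "healthy"


if __name__ == "__main__":
    print(classify_pair(primary_is_active=True, secondary_is_active=True))
```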

There are two methods to resolve a split brain.

-

SevOne NMS User Interface - Cluster Manager

-

Command Line Interface

SevOne NMS User Interface

The Cluster Manager displays the cluster hierarchy on the left and enables you to rectify split brain occurrences. From the navigation bar click the Administration menu and select Cluster Manager to display the Cluster Manager.

In the cluster hierarchy, click on the name of the peer pair that is the Hot Standby Appliance peer pair to display the two IP addresses of the two appliances that make up the peer pair. The Primary appliance displays first.

-

In a split brain situation where both appliances are active, neither appliance displays Passive.

-

In a split brain situation where both appliances are passive, both appliances display Passive.

Cluster Manager – Appliance Level – Split Brain – Both Passive

Select one of the affected appliances in the hierarchy on the left side. Click the icon to display a Rectify Split Brain option.

-

When both appliances think they are passive and you select this option, the appliance for which you select this option becomes the active appliance in the Hot Standby Appliance peer pair.

-

When both appliances think they are active and you select this option, the appliance for which you select this option becomes the passive appliance in the Hot Standby Appliance peer pair.

Cluster Manager – Appliance Level – Split Brain - Both Active

Example: Select 192.168.33.14 and click Rectify Split Brain to make 192.168.33.14 the passive appliance when both appliances are active.
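The effect of Rectify Split Brain on the selected appliance can be sketched as a small state change. The Python below is a model of the rule described above, not SevOne NMS code; the function name is hypothetical, the second IP address is invented for the example, and leaving the unselected appliance in its current role is an assumption drawn from the description.

```python
from typing import Dict


def rectify_split_brain(states: Dict[str, str], selected: str) -> Dict[str, str]:
    """Flip the role of the selected appliance in a split brain pair.

    states maps each appliance IP address to "active" or "passive".
    """
    if len(states) != 2 or selected not in states:
        raise ValueError("expected a pair that contains the selected appliance")
    roles = set(states.values())
    if len(roles) != 1:
        raise ValueError("pair is not in a split brain state")
    current = roles.pop()
    # Both active: the selected appliance becomes passive.
    # Both passive: the selected appliance becomes active.
    new_role = "passive" if current == "active" else "active"
    result = dict(states)
    result[selected] = new_role
    return result


if __name__ == "__main__":
    pair = {"192.168.33.14": "active", "192.168.33.15": "active"}
    print(rectify_split_brain(pair, "192.168.33.14"))
```

Because the selected appliance always takes the opposite of the shared role, which appliance you select determines which one ends up active.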

Command Line Interface Method

Perform the following steps to fix a split brain situation from the command line interface.

-

Log on to the Secondary appliance that is in active/master mode.

-

Enter masterslaveconsole

-

When in masterslaveconsole you can enter Help for a list of commands.

-

To check the appliance type, enter GET TYPE.

-

To check the appliance status, enter GET STATUS.

-

To make the appliance passive, enter BECOME SLAVE.

-

After you run the command, enter GET JOB STATUS to check the status. This tells you if the process is still running and for how long.

After the process completes, check SevOne-masterslave-status to confirm that the changes were made and that the appliances are in their original configuration.